Microscopy Image Browser 2.91

MIB

Template class for use with GUIs developed with MATLAB App Designer.

Public Member Functions | |

| function [ lgraph , outputPatchSize ] = | createNetwork (previewSwitch) |

| generate network. Parameters: previewSwitch - logical switch; when 1, the generated network is only for preview, i.e. the class weights are not calculated | |

| function net = | generateDeepLabV3Network (imageSize, numClasses, targetNetwork) |

| generate DeepLab v3+ convolutional neural network for semantic image segmentation of 2D RGB images | |

| function net = | generate3DDeepLabV3Network (imageSize, numClasses, downsamplingFactor, targetNetwork) |

| generate a hybrid 2.5D DeepLabv3+ convolutional neural network for semantic image segmentation. The training data should be a small substack of 3, 5, 7, etc. slices, where only the middle slice is segmented. As an update, two blocks of 3D convolutions were added before the standard DLv3 | |

| function [ net , outputSize ] = | generateUnet2DwithEncoder (imageSize, encoderNetwork) |

| generate Unet convolutional neural network for semantic image segmentation of 2D RGB images using a specified encoder | |

| function [ patchOut , info , augList , augPars ] = | mibDeepAugmentAndCrop3dPatchMultiGPU (info, inputPatchSize, outputPatchSize, mode, options) |

| function [ patchOut , info , augList , augPars ] = | mibDeepAugmentAndCrop2dPatchMultiGPU (info, inputPatchSize, outputPatchSize, mode, options) |

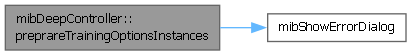

| function TrainingOptions = | preprareTrainingOptionsInstances (valDS) |

| prepare training options for training of the instance segmentation network | |

| mibDeepController (mibModel, varargin) | |

| function | closeWindow () |

| update preferences structure | |

| function | dnd_files_callback (hWidget, dragIn) |

| drag and drop config name to obj.View.handles.NetworkPanel to load it | |

| function | updateWidgets () |

| update widgets of this window | |

| function | activationLayerChangeCallback () |

| callback for modification of the Activation Layer dropdown | |

| function | setSegmentationLayer () |

| callback for modification of the Segmentation Layer dropdown | |

| function | toggleAugmentations () |

| callback for press of the T_augmentation checkbox | |

| function | updateBatchOptFromGUI (event) |

| function | singleModelTrainingFileValueChanged (event) |

| callback for press of SingleModelTrainingFile | |

| function | selectWorkflow (event) |

| select deep learning workflow to perform | |

| function | selectArchitecture (event) |

| select the target architecture | |

| function | returnBatchOpt (BatchOptOut) |

| return a structure with Batch Options and possible configurations via the notify syncBatch event. Parameters: BatchOptOut - a local structure with Batch Options generated during the Continue callback; it may contain more fields than the obj.BatchOpt structure | |

| function | bioformatsCallback (event) |

| update available filename extensions | |

| function net = | selectNetwork (networkName) |

| select a filename for a new network in the Train mode, or select a network to use for the Predict mode | |

| function | selectDirerctories (event) |

| function | updateImageDirectoryPath (event) |

| update directories with images for training, prediction and results | |

| function | checkNetwork (fn) |

| generate and check network using settings in the Train tab | |

| function lgraph = | updateNetworkInputLayer (lgraph, inputPatchSize) |

| update the input layer settings for lgraph; parameters are taken from obj.InputLayerOpt | |

| function lgraph = | updateActivationLayers (lgraph) |

| update the activation layers depending on settings in obj.BatchOpt.T_ActivationLayer and obj.ActivationLayerOpt | |

| function lgraph = | updateConvolutionLayers (lgraph) |

| update the convolution layers by providing new set of weight initializers | |

| function lgraph = | updateMaxPoolAndTransConvLayers (lgraph, poolSize) |

| update maxPool and TransposedConvolution layers depending on the network downsampling factor; only for U-net and SegNet. This function is applied when the network downsampling factor is different from 2 | |

| function lgraph = | updateSegmentationLayer (lgraph, classNames) |

| redefine the segmentation layer of lgraph based on obj.BatchOpt settings | |

| function [ status , augNumber ] = | setAugFuncHandles (mode, augOptions) |

| define list of 2D/3D augmentation functions | |

| function [ dataOut , info ] = | classificationAugmentationPipeline (dataIn, info, inputPatchSize, outputPatchSize, mode) |

| function | setAugmentation3DSettings () |

| update settings for augmentation of 3D images | |

| function | setActivationLayerOptions () |

| update options for the activation layers | |

| function | setSegmentationLayerOptions () |

| update options for the segmentation layer | |

| function | setAugmentation2DSettings () |

| update settings for augmentation of 2D images | |

| function | setInputLayerSettings () |

| update init settings for the input layer of networks | |

| function | setTrainingSettings () |

| update settings for training of networks | |

| function | start (event) |

| start calculations; depending on the selected tab, preprocessing, training, or prediction is initialized | |

| function imgOut = | channelWisePreProcess (imgIn) |

| Normalize images. As the input has 4 channels (modalities), remove the mean and divide by the standard deviation of each modality independently. | |

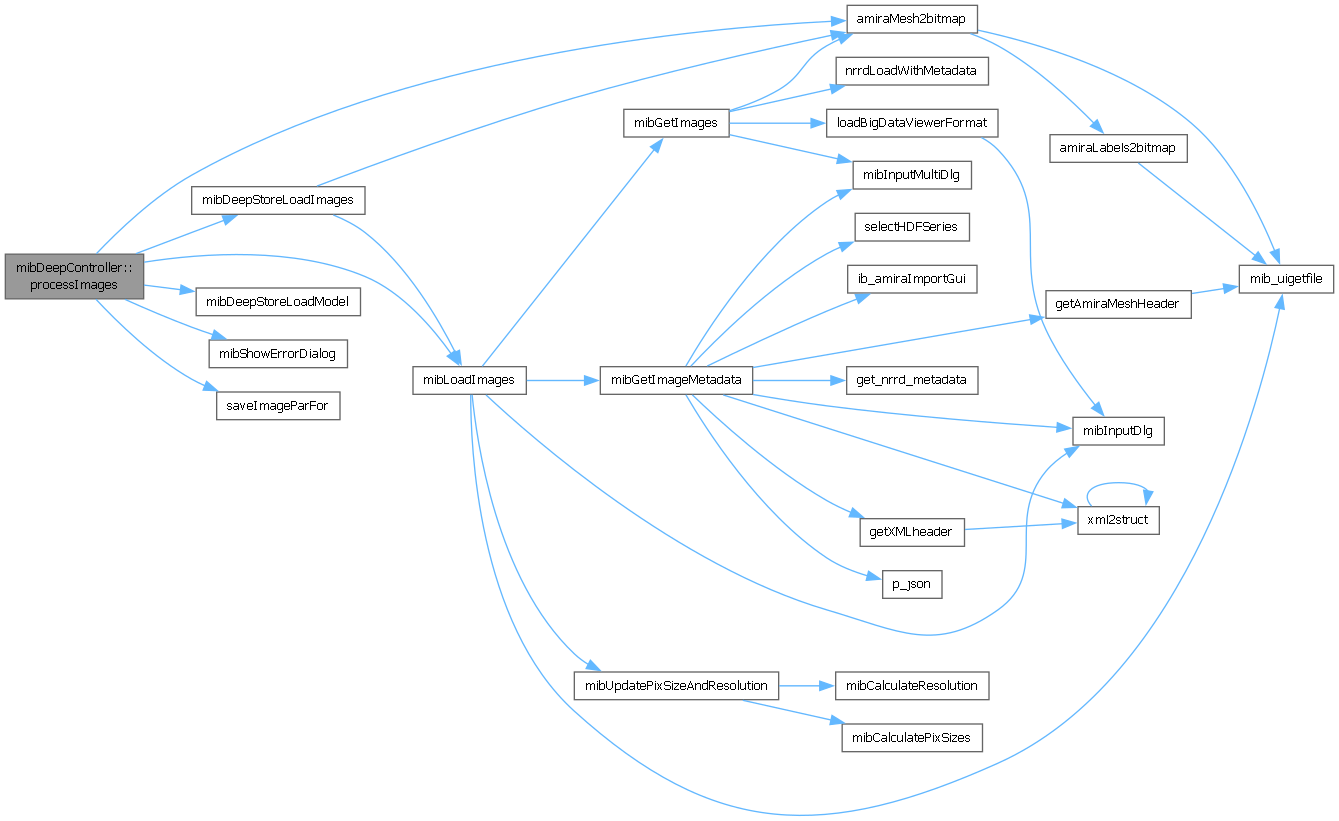

| function | processImages (preprocessFor) |

| Preprocess images for training and prediction. | |

| function | startPreprocessing () |

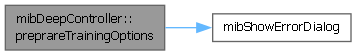

| function TrainingOptions = | preprareTrainingOptions (valDS) |

| prepare training options for the network training | |

| function | updatePreprocessingMode () |

| callback for change of selection in the Preprocess for dropdown | |

| function | saveConfig (configName) |

| save Deep MIB configuration to a file | |

| function res = | correctBatchOpt (res) |

| correct loaded BatchOpt structure if it is not compatible with the current version of DeepMIB | |

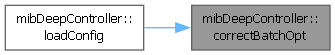

| function | loadConfig (configName) |

| load config file with Deep MIB settings | |

| function | startPredictionBlockedImage () |

| predict 2D/3D datasets using the blockedImage class; requires R2021a or newer | |

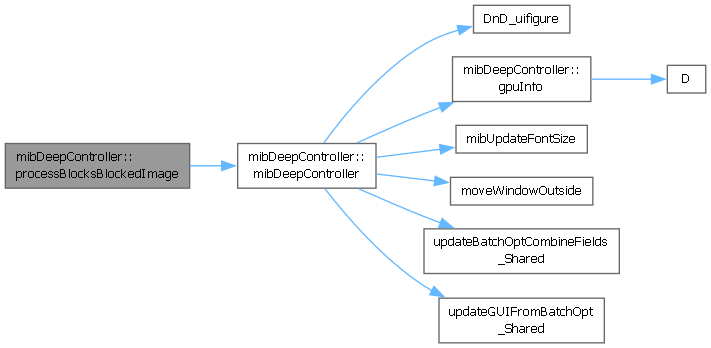

| function [ outputLabels , scoreImg ] = | processBlocksBlockedImage (vol, zValue, net, inputPatchSize, outputPatchSize, blockSize, padShift, dataDimension, patchwiseWorkflowSwitch, patchwisePatchesPredictSwitch, classNames, generateScoreFiles, executionEnvironment, fn) |

| dataDimension: numeric switch that identifies the dataset dimension, can be 2, 2.5, 3 | |

| function | startPrediction2D () |

| predict datasets for 2D networks; moved to a separate function for better performance | |

| function | startPrediction3D () |

| predict datasets for 3D networks; moved to a separate function to improve performance | |

| function bls = | generateDynamicMaskingBlocks (vol, blockSize, noColors) |

| generate blocks using dynamic masking parameters acquired in obj.DynamicMaskOpt | |

| function | previewPredictions () |

| load images of prediction scores into MIB | |

| function | previewModels (loadImagesSwitch) |

| load images for predictions and the resulting models into MIB | |

| function | evaluateSegmentationPatches () |

| evaluate segmentation results for the patches in the patch-wise mode | |

| function | evaluateSegmentation () |

| evaluate segmentation results by comparing predicted models with the ground truth models | |

| function | selectGPUDevice () |

| select environment for computations | |

| function | gpuInfo () |

| display information about the selected GPU | |

| function | exportNetwork () |

| convert and export network to ONNX or TensorFlow formats | |

| function | transferLearning () |

| perform fine-tuning of the loaded network to a different number of classes | |

| function | importNetwork () |

| import an externally trained or designed network to be used with DeepMIB | |

| function | updateDynamicMaskSettings () |

| update settings for calculation of dynamic masks during prediction using the blockedImage mode; the settings are stored in obj.DynamicMaskOpt | |

| function | saveCheckpointNetworkCheck () |

| callback for press of Save checkpoint networks (obj.View.handles.T_SaveProgress) | |

| function | previewDynamicMask () |

| preview results for the dynamic mode | |

| function | exploreActivations () |

| explore activations within the trained network | |

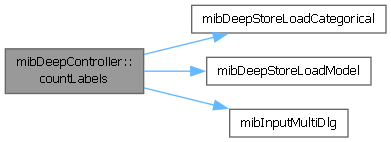

| function | countLabels () |

| count occurrences of labels in model files; callback for press of "Count labels" in the Options panel | |

| function | balanceClasses () |

| balance classes before training; see the example at https://se.mathworks.com/help/vision/ref/balancepixellabels.html | |

| function | customTrainingProgressWindow_Callback (event) |

| callback for click on obj.View.handles.O_CustomTrainingProgressWindow checkbox | |

| function | previewImagePatches_Callback (event) |

| callback for value change of obj.View.handles.O_PreviewImagePatches | |

| function | sendReportsCallback () |

| define parameters for sending progress report to the user's email address | |

| function | helpButton_callback () |

| show Help sections | |

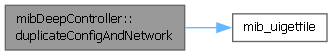

| function | duplicateConfigAndNetwork () |

| copy the network file and its config to a new filename | |

Static Public Member Functions | |

| static function | viewListner_Callback (obj, src, evnt) |

| static function data = | tif3DFileRead (filename) |

| data = tif3DFileRead(filename): custom reading function to load tif files with a stack of images; used in the evaluate segmentation function | |

| static function [ outputLabeledImageBlock , scoreBlock ] = | segmentBlockedImage (block, net, dataDimension, patchwiseWorkflowSwitch, generateScoreFiles, executionEnvironment, padShift) |

| test function for utilization of blockedImage for prediction. The input block will be a batch of blocks from a blockedImage. | |

Public Attributes | |

| mibModel | |

| handles to mibModel | |

| mibController | |

| handle to mib controller | |

| View | |

| handle to the view / mibDeepGUI | |

| listener | |

| a cell array with handles to listeners | |

| childControllers | |

| list of opened subcontrollers | |

| childControllersIds | |

| a cell array with names of initialized child controllers | |

| availableArchitectures | |

| containers.Map with available architectures. keySet = {'2D Semantic', '2.5D Semantic', '3D Semantic', '2D Patch-wise', '2D Instance'}; valueSet{1} = {'DeepLab v3+', 'SegNet', 'U-net', 'U-net +Encoder'} (old: {'U-net', 'SegNet', 'DLv3 Resnet18', 'DLv3 Resnet50', 'DLv3 Xception', 'DLv3 Inception-ResNet-v2'}); valueSet{2} = {'Z2C + DLv3', 'Z2C + DLv3', 'Z2C + U-net', 'Z2C + U-net +Encoder'} (% 3DC + DLv3 Resnet18); valueSet{3} = {'U-net', 'U-net Anisotropic'}; valueSet{4} = {'Resnet18', 'Resnet50', 'Resnet101', 'Xception'}; valueSet{5} = {'SOLOv2'} (old: {'SOLOv2 Resnet18', 'SOLOv2 Resnet50'}) | |

| availableEncoders | |

| containers.Map with available encoders. The keySet is a mixture of "workflow -space- architecture", e.g. {'2D Semantic DeepLab v3+', '2D Semantic U-net +Encoder'}. Each entry lists the encoders for one "workflow -space- architecture" combination; the last value shows the selected encoder: encodersList{1} = {'Resnet18', 'Resnet50', 'Xception', 'InceptionResnetv2', 1} for 2D Semantic DeepLab v3+; encodersList{2} = {'Classic', 'Resnet18', 'Resnet50', 2} for 2D Semantic U-net +Encoder; encodersList{3} = {'Resnet18', 'Resnet50', 1} for 2D Instance SOLOv2 | |

| BatchOpt | |

| a structure compatible with batch operation. The name of each field should be displayed in a tooltip of the GUI; it is recommended that the Tags of widgets match the names of the fields in this structure. Field types: .Parameter - [editbox], char/string; .Checkbox - [checkbox], logical value true or false; .Dropdown{1} - [dropdown], cell string for the dropdown; .Dropdown{2} - [optional], an array with possible options; .Radio - [radiobuttons], cell string 'Radio1' or 'Radio2'...; .ParameterNumeric{1} - [numeric editbox], cell with a number; .ParameterNumeric{2} - [optional], vector with limits [min, max]; .ParameterNumeric{3} - [optional], string 'on' to round the value, 'off' to not round the value | |

| AugOpt2D | |

| a structure with augmentation options for 2D U-nets; the defaults are obtained from obj.mibModel.preferences.Deep.AugOpt2D, see getDefaultParameters.m: .FillValue = 0; .RandXReflection = true; .RandYReflection = true; .RandRotation = [-10, 10]; .RandScale = [.95 1.05]; .RandXScale = [.95 1.05]; .RandYScale = [.95 1.05]; .RandXShear = [-5 5]; .RandYShear = [-5 5]; | |

| Aug2DFuncNames | |

| cell array with names of 2D augmenter functions | |

| Aug2DFuncProbability | |

| probabilities of each 2D augmentation action to be triggered | |

| Aug3DFuncNames | |

| cell array with names of 3D augmenter functions | |

| Aug3DFuncProbability | |

| probabilities of each 3D augmentation action to be triggered | |

| gpuInfoFig | |

| a handle for GPU info window | |

| AugOpt3D | |

| .Fraction = .6; % augment 60% of patches .FillValue = 0; .RandXReflection = true; .RandYReflection = true; .RandZReflection = true; .Rotation90 = true; .ReflectedRotation90 = true; | |

| ActivationLayerOpt | |

| options for the activation layer | |

| DynamicMaskOpt | |

| options for calculation of dynamic masks for prediction using the blocked image mode: .Method = 'Keep above threshold' ('Keep above threshold' or 'Keep below threshold'); .ThresholdValue = 60; .InclusionThreshold = 0.1 (inclusion threshold for mask blocks) | |

| SegmentationLayerOpt | |

| options for the segmentation layer | |

| InputLayerOpt | |

| a structure with settings for the input layer: .Normalization = 'zerocenter'; .Mean = []; .StandardDeviation = []; .Min = []; .Max = []; | |

| modelMaterialColors | |

| colors of materials | |

| PatchPreviewOpt | |

| structure with preview patch options: .noImages = 9, number of images in montage; .imageSize = 160, patch size for preview; .labelShow = true, display overlay labels with details; .labelSize = 9, font size for the label; .labelColor = 'black', color of the label; .labelBgColor = 'yellow', color of the label background; .labelBgOpacity = 0.6, opacity of the background | |

| SendReports | |

| send email reports with progress of the training process: .T_SendReports = false; .FROM_email = 'user@gmail.com'; .SMTP_server = 'smtp-relay.brevo.com'; .SMTP_port = 587; .SMTP_auth = true; .SMTP_starttls = true; .SMTP_username = 'user@gmail.com'; .SMTP_password = ''; .sendWhenFinished = false; .sendDuringRun = false; | |

| TrainingOpt | |

| a structure with training options; the defaults are obtained from obj.mibModel.preferences.Deep.TrainingOpt, see getDefaultParameters.m: .solverName = 'adam'; .MaxEpochs = 50; .Shuffle = 'once'; .InitialLearnRate = 0.0005; .LearnRateSchedule = 'piecewise'; .LearnRateDropPeriod = 10; .LearnRateDropFactor = 0.1; .L2Regularization = 0.0001; .Momentum = 0.9; .ValidationFrequency = 400; .Plots = 'training-progress'; | |

| TrainingProgress | |

| a structure used for the training progress plot in the compiled version of MIB: .maxNoIter - max number of iterations during training; .iterPerEpoch - iterations per epoch; .stopTraining - logical switch that forces training to stop and saves all progress | |

| wb | |

| handle to waitbar | |

| colormap6 | |

| colormap for 6 colors | |

| colormap20 | |

| colormap for 20 colors | |

| colormap255 | |

| colormap for 255 colors | |

| sessionSettings | |

| structure for the session settings .countLabelsDir - directory with labels to count, used in count labels function | |

| TrainEngine | |

| temp property to test the trainnet function for training; can be 'trainnet' or 'trainNetwork' | |

| EVENT | closeEvent |

| event fired when the window is closed | |

Public Attributes inherited from handle | |

| addlistener | |

| Creates a listener for the specified event and assigns a callback function to execute when the event occurs. | |

| notify | |

| Broadcast a notice that a specific event is occurring on a specified handle object or array of handle objects. | |

| delete | |

| Handle object destructor method that is called when the object's lifecycle ends. | |

| disp | |

| Handle object disp method which is called by the display method. See the MATLAB disp function. | |

| display | |

| Handle object display method called when MATLAB software interprets an expression returning a handle object that is not terminated by a semicolon. See the MATLAB display function. | |

| findobj | |

| Finds objects matching the specified conditions from the input array of handle objects. | |

| findprop | |

| Returns a meta.property object associated with the specified property name. | |

| fields | |

| Returns a cell array of string containing the names of public properties. | |

| fieldnames | |

| Returns a cell array of string containing the names of public properties. See the MATLAB fieldnames function. | |

| isvalid | |

| Returns a logical array in which elements are true if the corresponding elements in the input array are valid handles. This method is Sealed so you cannot override it in a handle subclass. | |

| eq | |

| Relational functions example. See details for more information. | |

| transpose | |

| Transposes the elements of the handle object array. | |

| permute | |

| Rearranges the dimensions of the handle object array. See the MATLAB permute function. | |

| reshape | |

| Changes the dimensions of the handle object array to the specified dimensions. See the MATLAB reshape function. | |

| sort | |

| Sort the handle objects in any array in ascending or descending order. | |

Template class for use with GUIs developed with MATLAB App Designer.

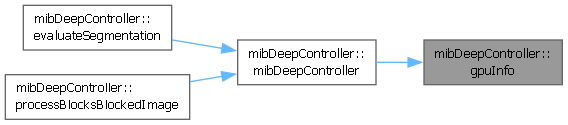

| mibDeepController.mibDeepController | ( | mibModel, | |

| varargin ) |

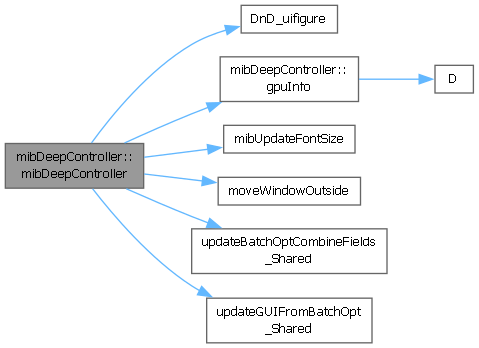

References handle.addlistener, DnD_uifigure(), gpuInfo(), mibModel, mibUpdateFontSize(), moveWindowOutside(), handle.notify, updateBatchOptCombineFields_Shared(), and updateGUIFromBatchOpt_Shared().

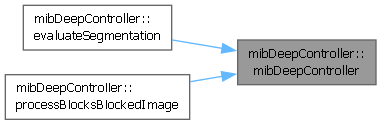

Referenced by evaluateSegmentation(), and processBlocksBlockedImage().

| function mibDeepController.activationLayerChangeCallback | ( | ) |

callback for modification of the Activation Layer dropdown

| function mibDeepController.balanceClasses | ( | ) |

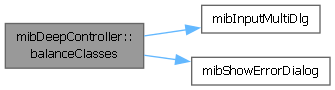

balance classes before training; see the example at https://se.mathworks.com/help/vision/ref/balancepixellabels.html

References mibInputMultiDlg(), and mibShowErrorDialog().

| function mibDeepController.bioformatsCallback | ( | event | ) |

update available filename extensions

| event | an event structure of appdesigner |

| function imgOut = mibDeepController.channelWisePreProcess | ( | imgIn | ) |
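The summary for channelWisePreProcess states that each of the 4 input channels (modalities) is normalized independently by removing its mean and dividing by its standard deviation. The following is a minimal sketch of that idea, not the actual implementation; it assumes a height x width x channels array with channels in the third dimension, and the demo data and the zero-deviation guard are illustrative assumptions.

```matlab
% Hypothetical demo input: height x width x 4 channels (modalities)
imgIn = rand(64, 64, 4, 'single') * 255;

% Normalize every channel independently: (x - mean) / std
imgOut = zeros(size(imgIn), 'like', imgIn);
for ch = 1:size(imgIn, 3)
    chData = imgIn(:, :, ch);
    mu = mean(chData(:));
    sigma = std(chData(:));
    if sigma == 0
        sigma = 1;   % assumption: leave constant channels centered but unscaled
    end
    imgOut(:, :, ch) = (chData - mu) / sigma;
end
```

After this step each channel has approximately zero mean and unit standard deviation, so modalities with different intensity ranges contribute on a comparable scale.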

| function mibDeepController.checkNetwork | ( | fn | ) |

generate and check network using settings in the Train tab

| fn | optional string with filename (*.mibDeep) to preview its configuration |

References mibShowErrorDialog().

| function [ dataOut , info ] = mibDeepController.classificationAugmentationPipeline | ( | dataIn, | |

| info, | |||

| inputPatchSize, | |||

| outputPatchSize, | |||

| mode ) |

| function mibDeepController.closeWindow | ( | ) |

update preferences structure

References handle.isvalid, and handle.notify.

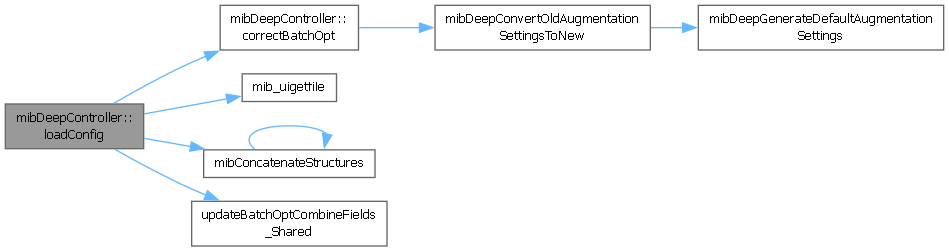

| function res = mibDeepController.correctBatchOpt | ( | res | ) |

correct loaded BatchOpt structure if it is not compatible with the current version of DeepMIB

| res | BatchOpt structure loaded from a file |

References mibDeepConvertOldAugmentationSettingsToNew().

Referenced by loadConfig().
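One common way to make a structure loaded from an older config compatible with a newer version is to add any missing fields from a set of defaults. The sketch below illustrates only that general idea; the field names are taken from elsewhere on this page, while their values and the merge logic are assumptions, and the actual correctBatchOpt may do considerably more (for example, converting old augmentation settings).

```matlab
% Hypothetical defaults for the current version (values are illustrative)
defaults = struct();
defaults.T_ActivationLayer = {'reluLayer'};
defaults.T_augmentation = true;

% Hypothetical BatchOpt loaded from an older config: one field is missing
res = struct();
res.T_augmentation = false;

% Add fields that are missing in the loaded structure, keep existing values
names = fieldnames(defaults);
for i = 1:numel(names)
    if ~isfield(res, names{i})
        res.(names{i}) = defaults.(names{i});
    end
end
```

Values already present in the loaded structure are left untouched, so user settings survive the update.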

| function mibDeepController.countLabels | ( | ) |

count occurrences of labels in model files; callback for press of "Count labels" in the Options panel

References mibDeepStoreLoadCategorical(), mibDeepStoreLoadModel(), and mibInputMultiDlg().

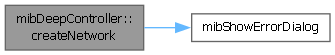

| function [ lgraph , outputPatchSize ] = mibDeepController.createNetwork | ( | previewSwitch | ) |

generate network. Parameters: previewSwitch - logical switch; when 1, the generated network is only for preview, i.e. the class weights are not calculated

| lgraph | network object |

| outputPatchSize | output patch size as [height, width, depth, color] |

References mibShowErrorDialog().

| function mibDeepController.customTrainingProgressWindow_Callback | ( | event | ) |

callback for click on obj.View.handles.O_CustomTrainingProgressWindow checkbox

| function mibDeepController.dnd_files_callback | ( | hWidget, | |

| dragIn ) |

drag and drop config name to obj.View.handles.NetworkPanel to load it

| hWidget | a handle to the object where the drag action landed |

| dragIn | a structure containing the dragged object: .ctrlKey - 0/1 whether the control key was pressed; .shiftKey - 0/1 whether the shift key was pressed; .names - cell array with filenames |

| function mibDeepController.duplicateConfigAndNetwork | ( | ) |

copy the network file and its config to a new filename

References BatchOpt, mib_uigetfile(), and wb.

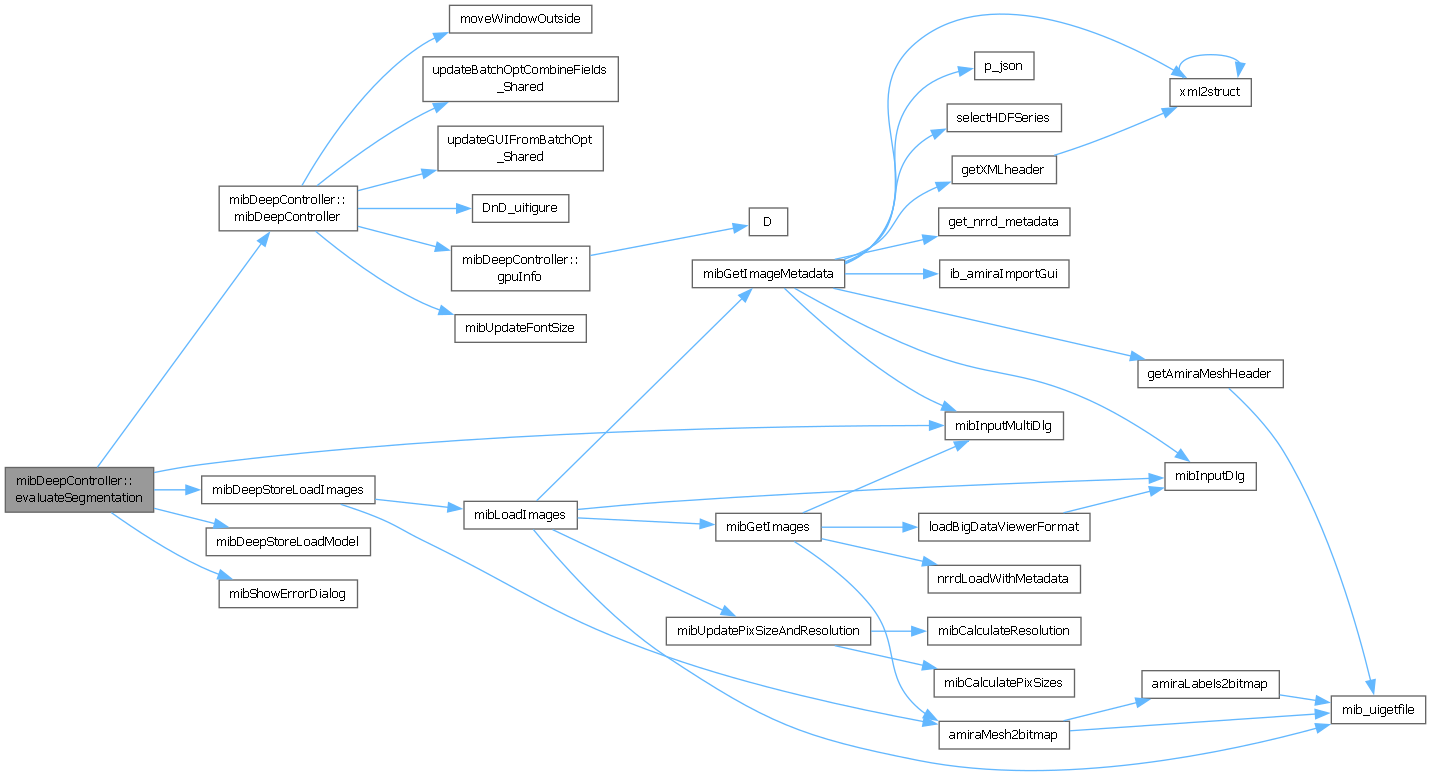

| function mibDeepController.evaluateSegmentation | ( | ) |

evaluate segmentation results by comparing predicted models with the ground truth models

References handle.fieldnames, max, mibDeepController(), mibDeepStoreLoadImages(), mibDeepStoreLoadModel(), mibInputMultiDlg(), and mibShowErrorDialog().

| function mibDeepController.evaluateSegmentationPatches | ( | ) |

evaluate segmentation results for the patches in the patch-wise mode

References C().

| function mibDeepController.exploreActivations | ( | ) |

explore activations within the trained network

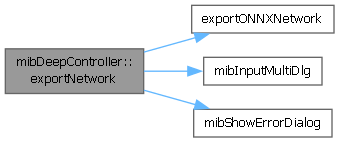

| function mibDeepController.exportNetwork | ( | ) |

convert and export network to ONNX or TensorFlow formats

References exportONNXNetwork(), mibInputMultiDlg(), mibShowErrorDialog(), and wb.

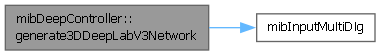

| function net = mibDeepController.generate3DDeepLabV3Network | ( | imageSize, | |

| numClasses, | |||

| downsamplingFactor, | |||

| targetNetwork ) |

generate a hybrid 2.5D DeepLabv3+ convolutional neural network for semantic image segmentation. The training data should be a small substack of 3, 5, 7, etc. slices, where only the middle slice is segmented. As an update, two blocks of 3D convolutions were added before the standard DLv3

| imageSize | vector [height, width, colors] defining input patch size, should be larger than [224 224] for resnet18, colors should be 3 |

| numClasses | number of output classes (including exterior) for the output results |

| targetNetwork | string defining the base architecture for the initialization: resnet18 - resnet18 network; resnet50 - resnet50 network; xception - xception network; inceptionresnetv2 - resnet50 network |

References mibInputMultiDlg().

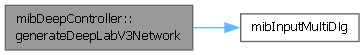

| function net = mibDeepController.generateDeepLabV3Network | ( | imageSize, | |

| numClasses, | |||

| targetNetwork ) |

generate DeepLab v3+ convolutional neural network for semantic image segmentation of 2D RGB images

| imageSize | vector [height, width, colors] defining input patch size, should be larger than [224 224] for resnet18, colors should be 3 |

| numClasses | number of output classes (including exterior) for the output results |

| targetNetwork | string defining the base architecture for the initialization: resnet18 - resnet18 network; resnet50 - resnet50 network; xception - xception network (requires matlab); inceptionresnetv2 - inceptionresnetv2 network (requires matlab) |

References max, and mibInputMultiDlg().

| function bls = mibDeepController.generateDynamicMaskingBlocks | ( | vol, | |

| blockSize, | |||

| noColors ) |

generate blocks using dynamic masking parameters acquired in obj.DynamicMaskOpt

| vol | blocked image to process |

| blockSize | block size |

| noColors | number of color channels in the blocked image |

| bls | calculated blockLocationSet with fields .ImageNumber, .BlockOrigin, .BlockSize, .Levels |

References max.
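The DynamicMaskOpt settings (Method, ThresholdValue, InclusionThreshold) suggest that blocks are kept for prediction only when a large enough fraction of their pixels passes an intensity threshold. The sketch below illustrates that interpretation on a small 2D array using base MATLAB only. The demo image, the [row, col] ordering of block origins, and the exact inclusion rule are assumptions; the real function works on a blockedImage and returns a blockLocationSet, whose coordinate conventions should be checked in its own documentation.

```matlab
% Settings mirroring the documented DynamicMaskOpt defaults
DynamicMaskOpt.Method = 'Keep above threshold';
DynamicMaskOpt.ThresholdValue = 60;
DynamicMaskOpt.InclusionThreshold = 0.1;

% Hypothetical demo image: left half dark (background), right half bright
img = zeros(8, 8, 'uint8');
img(:, 5:8) = 200;
blockSize = [4 4];

% Threshold the image into a binary mask
switch DynamicMaskOpt.Method
    case 'Keep above threshold'
        mask = img > DynamicMaskOpt.ThresholdValue;
    case 'Keep below threshold'
        mask = img < DynamicMaskOpt.ThresholdValue;
end

% Collect [row, col] origins of blocks whose masked fraction is high enough
blockOrigin = zeros(0, 2);
for r = 1:blockSize(1):size(img, 1)
    for c = 1:blockSize(2):size(img, 2)
        rows = r:min(r + blockSize(1) - 1, size(img, 1));
        cols = c:min(c + blockSize(2) - 1, size(img, 2));
        blockMask = mask(rows, cols);
        if mean(blockMask(:)) >= DynamicMaskOpt.InclusionThreshold
            blockOrigin(end + 1, :) = [r, c]; %#ok<AGROW>
        end
    end
end
disp(blockOrigin);
```

In this demo only the two blocks covering the bright right half are kept, so prediction would skip the empty background blocks.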

| function [ net , outputSize ] = mibDeepController.generateUnet2DwithEncoder | ( | imageSize, | |

| encoderNetwork ) |

generate Unet convolutional neural network for semantic image segmentation of 2D RGB images using a specified encoder

| imageSize | vector [height, width, colors] defining input patch size, should be larger than [224 224] for Resnet18, colors should be 3 |

| encoderNetwork | string defining the base architecture for the initialization: Classic - classic unet architecture; Resnet18 - Resnet18 network; Resnet50 - Resnet50 network |

| net | Unet dlnetwork, with softmax (Name: FinalNetworkSoftmax-Layer) as the final layer |

| outputSize | output size of the network returned as [height, width, number of classes] |

| function mibDeepController.gpuInfo | ( | ) |

display information about the selected GPU

References D(), and handle.fieldnames.

Referenced by mibDeepController().

| function mibDeepController.helpButton_callback | ( | ) |

show Help sections

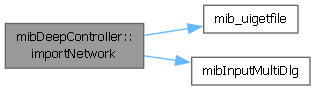

| function mibDeepController.importNetwork | ( | ) |

import an externally trained or designed network to be used with DeepMIB

Example (the beginning of the snippet is truncated in the source): ... resnet18); % save the network to a file: save('myNewNetwork.mat', 'net', '-mat'); % use the Import operation to load and adapt the network for use with DeepMIB

References ActivationLayerOpt, BatchOpt, DynamicMaskOpt, handle.fieldnames, InputLayerOpt, mib_uigetfile(), mibInputMultiDlg(), SegmentationLayerOpt, and wb.

| function mibDeepController.loadConfig | ( | configName | ) |

load config file with Deep MIB settings

| configName | full filename for the config file to load |

References correctBatchOpt(), mib_uigetfile(), mibConcatenateStructures(), and updateBatchOptCombineFields_Shared().

| function [ patchOut , info , augList , augPars ] = mibDeepController.mibDeepAugmentAndCrop2dPatchMultiGPU | ( | info, | |

| inputPatchSize, | |||

| outputPatchSize, | |||

| mode, | |||

| options ) |

| function [ patchOut , info , augList , augPars ] = mibDeepController.mibDeepAugmentAndCrop3dPatchMultiGPU | ( | info, | |

| inputPatchSize, | |||

| outputPatchSize, | |||

| mode, | |||

| options ) |

| function TrainingOptions = mibDeepController.preprareTrainingOptions | ( | valDS | ) |

prepare training options for the network training

| valDS | datastore with images for validation |

References mibShowErrorDialog().
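The documented TrainingOpt fields (solverName, MaxEpochs, Shuffle, and so on) have the same names as options of the trainingOptions function in Deep Learning Toolbox. The sketch below shows one way such a structure could be turned into name-value arguments; it is an illustration under that assumption and does not claim to reproduce how preprareTrainingOptions builds its result. The final call is left as a comment because it requires Deep Learning Toolbox.

```matlab
% Structure with the documented default values
TrainingOpt.solverName = 'adam';
TrainingOpt.MaxEpochs = 50;
TrainingOpt.Shuffle = 'once';
TrainingOpt.InitialLearnRate = 0.0005;
TrainingOpt.LearnRateSchedule = 'piecewise';
TrainingOpt.LearnRateDropPeriod = 10;
TrainingOpt.LearnRateDropFactor = 0.1;
TrainingOpt.L2Regularization = 0.0001;
TrainingOpt.Momentum = 0.9;
TrainingOpt.ValidationFrequency = 400;
TrainingOpt.Plots = 'training-progress';

% Convert the fields into a name-value argument list
names = fieldnames(TrainingOpt);
args = {};
for i = 1:numel(names)
    name = names{i};
    if strcmp(name, 'solverName')
        continue;   % the solver is passed as the first positional argument
    end
    if strcmp(name, 'Momentum') && ~strcmp(TrainingOpt.solverName, 'sgdm')
        continue;   % Momentum is an option of the 'sgdm' solver only
    end
    args(end + 1:end + 2) = {name, TrainingOpt.(name)};
end

% With Deep Learning Toolbox installed, the list could be used as:
% opts = trainingOptions(TrainingOpt.solverName, args{:});
```

Skipping Momentum for solvers other than 'sgdm' avoids passing an option that the chosen solver does not accept.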

| function TrainingOptions = mibDeepController.preprareTrainingOptionsInstances | ( | valDS | ) |

prepare training options for training of the instance segmentation network

| valDS | datastore with images for validation |

References mibShowErrorDialog().

| function mibDeepController.previewDynamicMask | ( | ) |

preview results for the dynamic mode

References wb.

| function mibDeepController.previewImagePatches_Callback | ( | event | ) |

callback for value change of obj.View.handles.O_PreviewImagePatches

| function mibDeepController.previewModels | ( | loadImagesSwitch | ) |

load images for predictions and the resulting models into MIB

| loadImagesSwitch | [logical], whether to load images (set to false when the images have already been preloaded). When true, both images and models are loaded; when false, only models are loaded |

| function mibDeepController.previewPredictions | ( | ) |

load images of prediction scores into MIB

| function [ outputLabels , scoreImg ] = mibDeepController.processBlocksBlockedImage | ( | vol, | |

| zValue, | |||

| net, | |||

| inputPatchSize, | |||

| outputPatchSize, | |||

| blockSize, | |||

| padShift, | |||

| dataDimension, | |||

| patchwiseWorkflowSwitch, | |||

| patchwisePatchesPredictSwitch, | |||

| classNames, | |||

| generateScoreFiles, | |||

| executionEnvironment, | |||

| fn ) |

dataDimension: numeric switch that identifies the dataset dimension, can be 2, 2.5, 3

References max, mibDeepController(), and handle.permute.

| function mibDeepController.processImages | ( | preprocessFor | ) |

Preprocess images for training and prediction.

| preprocessFor | a string with the target: 'training' or 'prediction' |

References amiraMesh2bitmap(), mibDeepStoreLoadImages(), mibDeepStoreLoadModel(), mibLoadImages(), mibShowErrorDialog(), handle.permute, and saveImageParFor().

| function mibDeepController.returnBatchOpt | ( | BatchOptOut | ) |

return a structure with Batch Options and possible configurations via the notify syncBatch event. Parameters: BatchOptOut - a local structure with Batch Options generated during the Continue callback; it may contain more fields than the obj.BatchOpt structure

References handle.notify.

| function mibDeepController.saveCheckpointNetworkCheck | ( | ) |

callback for press of Save checkpoint networks (obj.View.handles.T_SaveProgress)

References mibInputMultiDlg().

| function mibDeepController.saveConfig | ( | configName | ) |

save Deep MIB configuration to a file

| configName | [optional] string, full filename to the config file |

References ActivationLayerOpt, BatchOpt, convertAbsoluteToRelativePath(), DynamicMaskOpt, InputLayerOpt, and SegmentationLayerOpt.

| static function [ outputLabeledImageBlock , scoreBlock ] = mibDeepController.segmentBlockedImage | ( | block, net, dataDimension, patchwiseWorkflowSwitch, generateScoreFiles, executionEnvironment, padShift | ) |

test function for utilization of blockedImage for prediction. The input block will be a batch of blocks from a blockedImage.

| block | a structure with a block that is provided by blockedImage/apply. The first and second iterations have batch size==1, while the following ones have the batch size equal to the selected one. Fields of the structure: .BlockSub: [1 1 1]; .Start: [1 1 1]; .End: [224 224 3]; .Level: 1; .ImageNumber: 1; .BorderSize: [0 0 0]; .BlockSize: [224 224 3]; .BatchSize: 1; .Data: [224×224×3 uint8] |

| net | a trained DAGNetwork |

| dataDimension | numeric switch that identifies the dataset dimension, can be 2, 2.5, 3 |

| patchwiseWorkflowSwitch | logical switch indicating the patch-wise mode: when true, use the patch mode; when false, use semantic segmentation |

| generateScoreFiles | variable to generate score files with probabilities of classes: 0 - do not generate; 1 - use AM format; 2 - use Matlab non-compressed format; 3 - use Matlab compressed format; 4 - use Matlab non-compressed format (range 0-1) |

| executionEnvironment | string with the environment to execute prediction |

| padShift | numeric, (y,x,z or y,x) value for the padding, used during the overlap mode to crop the output patch for export |

References handle.permute, and handle.reshape.
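To make the block structure and the padShift parameter more concrete, the sketch below builds a structure with the field values listed for segmentBlockedImage and crops the padding from a patch. The symmetric crop on both sides of each axis is an assumption about how padShift is applied in the overlap mode, and the padShift value itself is hypothetical.

```matlab
% Block structure with the field values listed in the documentation
block.BlockSub = [1 1 1];
block.Start = [1 1 1];
block.End = [224 224 3];
block.Level = 1;
block.ImageNumber = 1;
block.BorderSize = [0 0 0];
block.BlockSize = [224 224 3];
block.BatchSize = 1;
block.Data = zeros(224, 224, 3, 'uint8');

% Hypothetical padding in (y, x) order
padShift = [16 16];

% Assumption: the same amount of padding is removed from both sides
cropped = block.Data(1 + padShift(1):end - padShift(1), ...
                     1 + padShift(2):end - padShift(2), :);
disp(size(cropped));
```

With a 224 x 224 patch and a padding of 16 pixels per side, the exported region is 192 x 192, which is how overlapping patches can be stitched without edge artifacts.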

| function mibDeepController.selectArchitecture | ( | event | ) |

select the target architecture

| function mibDeepController.selectDirerctories | ( | event | ) |

| function mibDeepController.selectGPUDevice | ( | ) |

select environment for computations

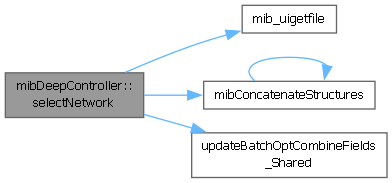

| function net = mibDeepController.selectNetwork | ( | networkName | ) |

select a filename for a new network in the Train mode, or select a network to use for the Predict mode

| networkName | optional parameter with the network full filename |

| net | trained network |

References handle.fieldnames, mib_uigetfile(), mibConcatenateStructures(), and updateBatchOptCombineFields_Shared().

| function mibDeepController.selectWorkflow | ( | event | ) |

select deep learning workflow to perform

| function mibDeepController.sendReportsCallback | ( | ) |

define parameters for sending progress report to the user's email address

References mibInputMultiDlg().

| function mibDeepController.setActivationLayerOptions | ( | ) |

update options for the activation layers

References mibInputMultiDlg().

| function [ status , augNumber ] = mibDeepController.setAugFuncHandles | ( | mode, | |

| augOptions ) |

define list of 2D/3D augmentation functions

| mode | string defining 2D or 3D augmentations |

| augOptions | a custom temporary structure with augmentation options to be used instead of obj.AugOpt2D and obj.AugOpt3D. It is used by mibDeepAugmentSettingsController to preview selected augmentations |

| status | a logical success switch (1-success, 0- fail) |

| augNumber | number of selected augmentations |

References handle.fieldnames.

| function mibDeepController.setAugmentation2DSettings | ( | ) |

update settings for augmentation of 2D images

References mibDeepConvertOldAugmentationSettingsToNew().

| function mibDeepController.setAugmentation3DSettings | ( | ) |

update settings for augmentation of 3D images

References mibDeepConvertOldAugmentationSettingsToNew().

| function mibDeepController.setInputLayerSettings | ( | ) |

update init settings for the input layer of networks

References mibInputMultiDlg(), and handle.reshape.

| function mibDeepController.setSegmentationLayer | ( | ) |

callback for modification of the Segmentation Layer dropdown

| function mibDeepController.setSegmentationLayerOptions | ( | ) |

update options for the segmentation layer

References mibInputMultiDlg().

| function mibDeepController.setTrainingSettings | ( | ) |

update settings for training of networks

References mibInputMultiDlg().

| function mibDeepController.singleModelTrainingFileValueChanged | ( | event | ) |

callback for press of SingleModelTrainingFile

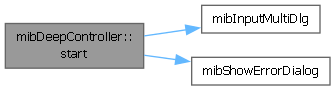

| function mibDeepController.start | ( | event | ) |

start calculations; depending on the selected tab, preprocessing, training, or prediction is initialized

References mibInputMultiDlg(), and mibShowErrorDialog().

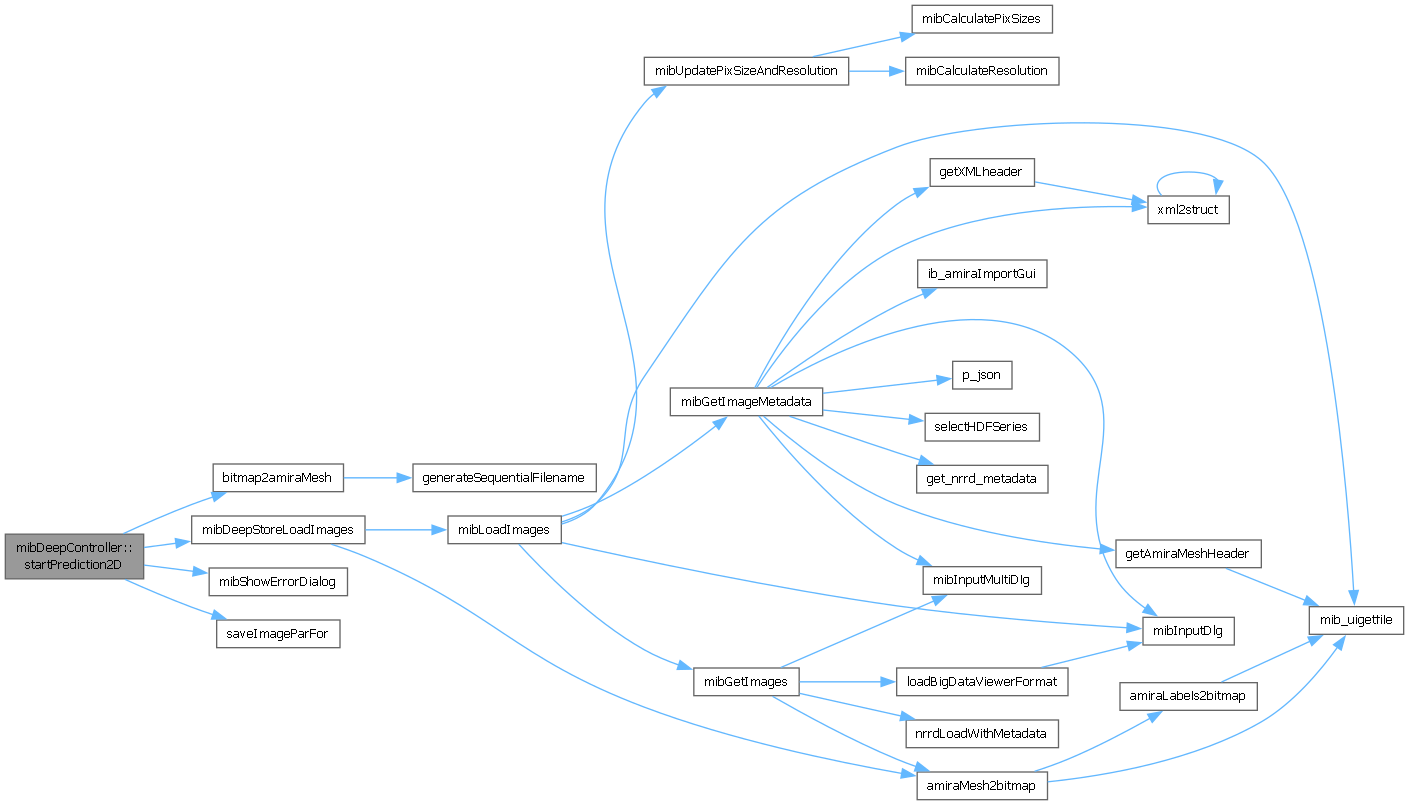

| function mibDeepController.startPrediction2D | ( | ) |

predict datasets for 2D networks; moved to a separate function for better performance

References bitmap2amiraMesh(), max, mibDeepStoreLoadImages(), mibShowErrorDialog(), modelMaterialColors, handle.notify, and saveImageParFor().

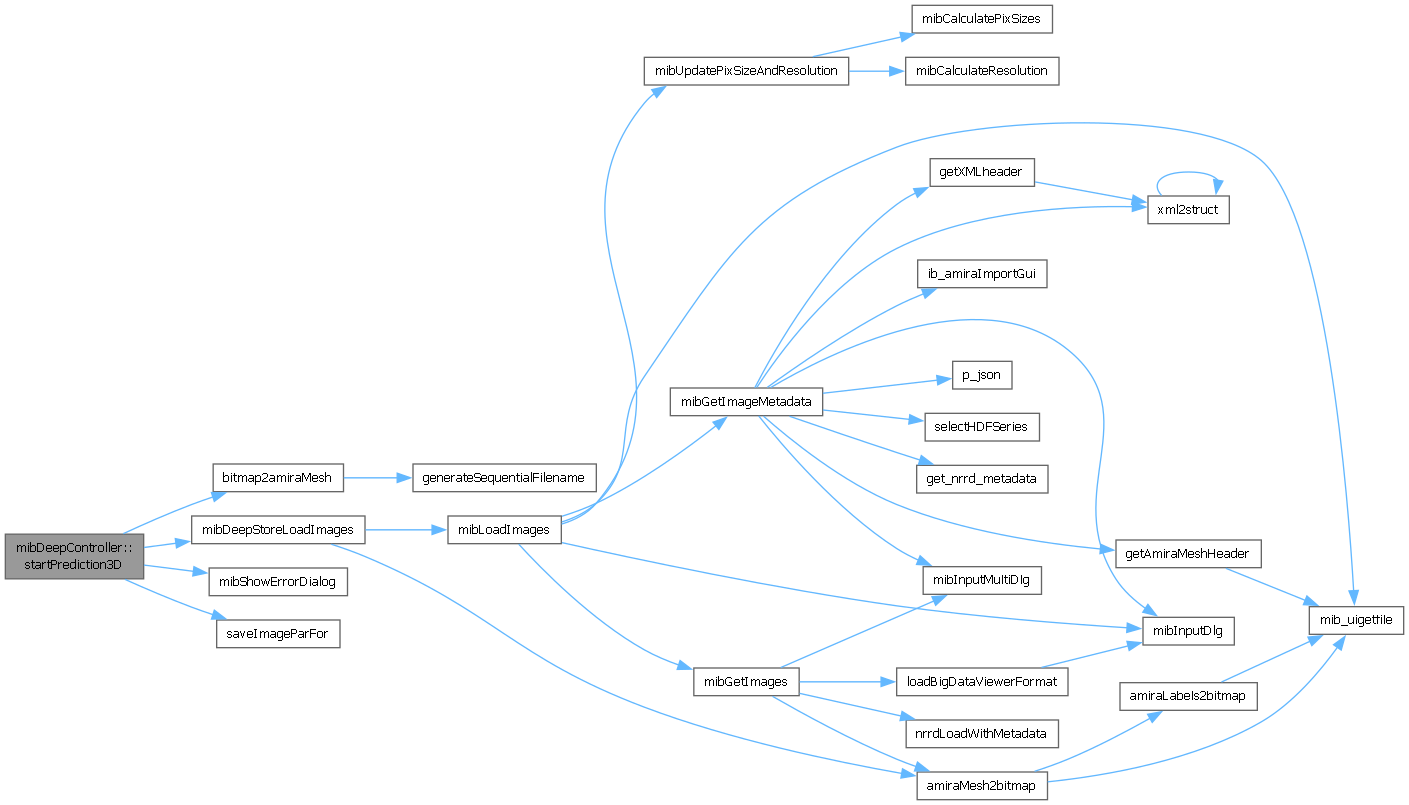

| function mibDeepController.startPrediction3D | ( | ) |

predict datasets for 3D networks; moved to a separate function to improve performance

References bitmap2amiraMesh(), max, mibDeepStoreLoadImages(), mibShowErrorDialog(), min, modelMaterialColors, handle.notify, handle.permute, and saveImageParFor().

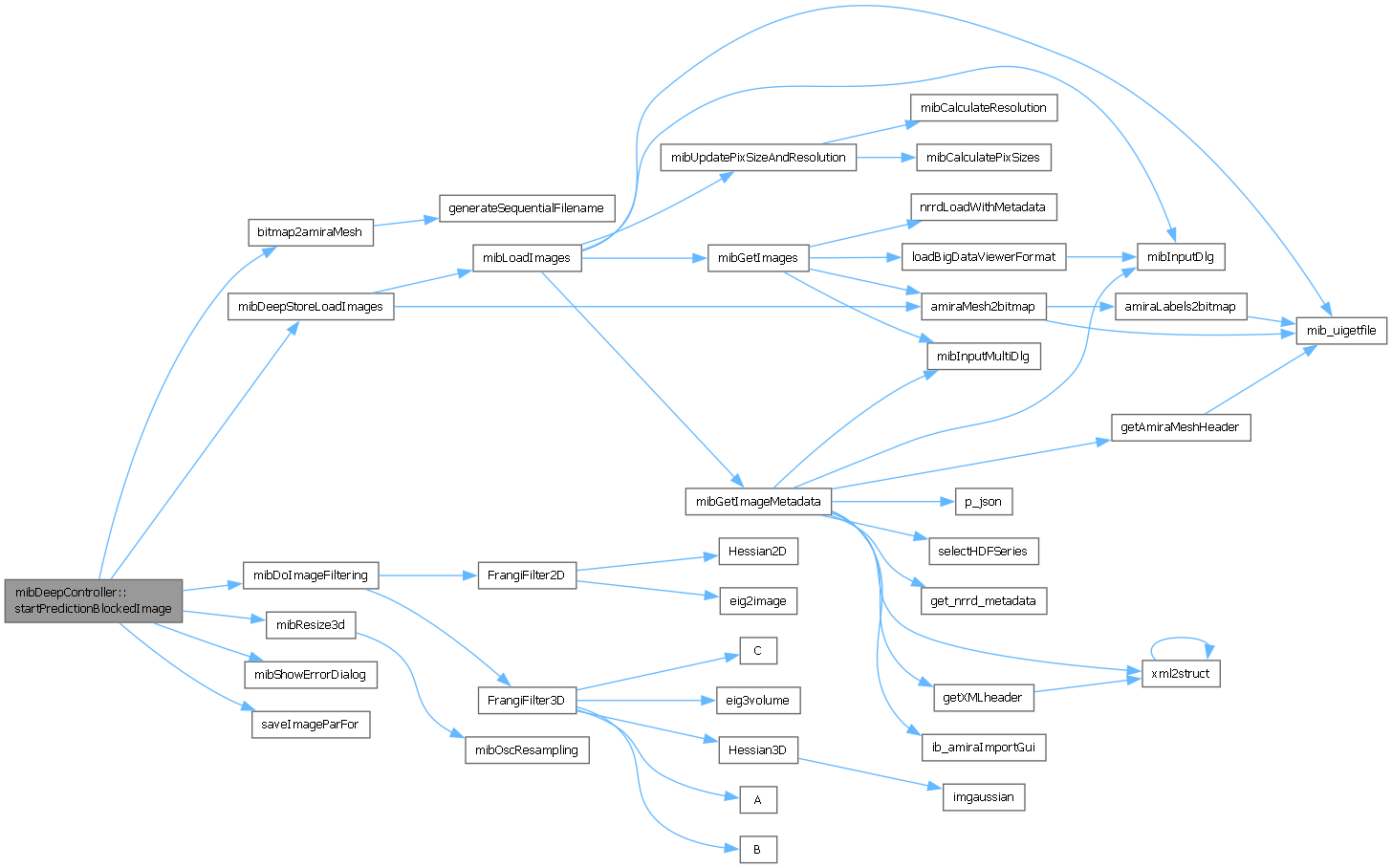

| function mibDeepController.startPredictionBlockedImage | ( | ) |

predict 2D/3D datasets using the blockedImage class; requires R2021a or newer

References bitmap2amiraMesh(), max, mibDeepStoreLoadImages(), mibDoImageFiltering(), mibResize3d(), mibShowErrorDialog(), min, modelMaterialColors, handle.notify, and saveImageParFor().

| function mibDeepController.startPreprocessing | ( | ) |

|

static |

data = tif3DFileRead(filename): custom reading function to load TIF files that contain a stack of images; used in the evaluate segmentation function
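A reader of this kind can be sketched with the standard `imfinfo` and `imread` functions. The function name `readTifStackSketch` is hypothetical, and the sketch assumes grayscale, non-logical pages of identical size and class; it is an illustration and not the MIB implementation.

```matlab
function data = readTifStackSketch(filename)
% Hypothetical sketch: read all pages of a multi-page TIF into one array.
% Assumes grayscale pages with the same height, width, and numeric class.
info = imfinfo(filename);            % one struct element per page
numPages = numel(info);
firstPage = imread(filename, 1);     % read page 1 to learn size and class
data = zeros([size(firstPage, 1), size(firstPage, 2), numPages], class(firstPage));
data(:, :, 1) = firstPage;
for z = 2:numPages
    data(:, :, z) = imread(filename, z);   % the index selects the page
end
end
```

The result is a height x width x depth array, which is a convenient layout when comparing a predicted stack against ground-truth labels.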

| function mibDeepController.toggleAugmentations | ( | ) |

callback for press of the T_augmentation checkbox

| function mibDeepController.transferLearning | ( | ) |

perform fine-tuning of the loaded network to a different number of classes

References mibInputMultiDlg().

| function lgraph = mibDeepController.updateActivationLayers | ( | lgraph | ) |

update the activation layers depending on settings in obj.BatchOpt.T_ActivationLayer and obj.ActivationLayerOpt

| function mibDeepController.updateBatchOptFromGUI | ( | event | ) |

| function lgraph = mibDeepController.updateConvolutionLayers | ( | lgraph | ) |

update the convolution layers by providing new set of weight initializers

| function mibDeepController.updateDynamicMaskSettings | ( | ) |

update settings for calculation of dynamic masks during prediction using the blockedImage mode; the settings are stored in obj.DynamicMaskOpt

References mibInputMultiDlg().

| function mibDeepController.updateImageDirectoryPath | ( | event | ) |

update directories with images for training, prediction and results

| function lgraph = mibDeepController.updateMaxPoolAndTransConvLayers | ( | lgraph, | |

| poolSize ) |

update maxPool and TransposedConvolution layers depending on the network downsampling factor; only for U-net and SegNet. This function is applied when the network downsampling factor is different from 2

| function lgraph = mibDeepController.updateNetworkInputLayer | ( | lgraph, | |

| inputPatchSize ) |

update the input layer settings for lgraph; parameters are taken from obj.InputLayerOpt

References handle.reshape.

| function mibDeepController.updatePreprocessingMode | ( | ) |

callback for change of selection in the Preprocess for dropdown

| function lgraph = mibDeepController.updateSegmentationLayer | ( | lgraph, | |

| classNames ) |

redefine the segmentation layer of lgraph based on obj.BatchOpt settings

| classNames | cell array with class names; when not provided, the auto switch is used |

| function mibDeepController.updateWidgets | ( | ) |

update widgets of this window

References updateGUIFromBatchOpt_Shared().

|

static |

| mibDeepController.ActivationLayerOpt |

options for the activation layer

Referenced by importNetwork(), and saveConfig().

| mibDeepController.Aug2DFuncNames |

cell array with names of 2D augmenter functions

| mibDeepController.Aug2DFuncProbability |

probabilities of each 2D augmentation action to be triggered

| mibDeepController.Aug3DFuncNames |

cell array with names of 3D augmenter functions

| mibDeepController.Aug3DFuncProbability |

probabilities of each 3D augmentation action to be triggered

| mibDeepController.AugOpt2D |

a structure with augmentation options for 2D U-nets; defaults are obtained from obj.mibModel.preferences.Deep.AugOpt2D, see getDefaultParameters.m

- .FillValue = 0;
- .RandXReflection = true;
- .RandYReflection = true;
- .RandRotation = [-10, 10];
- .RandScale = [.95 1.05];
- .RandXScale = [.95 1.05];
- .RandYScale = [.95 1.05];
- .RandXShear = [-5 5];
- .RandYShear = [-5 5];
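As an illustration, the defaults listed for AugOpt2D can be written as a plain structure. How MIB draws values from the two-element ranges is not stated here; the uniform sampling below is an assumption made only for this sketch, and the variable names `rotationRange` and `rotationAngle` are hypothetical.

```matlab
% Default 2D augmentation options as listed for AugOpt2D
AugOpt2D = struct();
AugOpt2D.FillValue = 0;
AugOpt2D.RandXReflection = true;
AugOpt2D.RandYReflection = true;
AugOpt2D.RandRotation = [-10, 10];
AugOpt2D.RandScale = [.95 1.05];
AugOpt2D.RandXScale = [.95 1.05];
AugOpt2D.RandYScale = [.95 1.05];
AugOpt2D.RandXShear = [-5 5];
AugOpt2D.RandYShear = [-5 5];

% Assumption: a [min, max] range is sampled uniformly for each patch
rotationRange = AugOpt2D.RandRotation;
rotationAngle = rotationRange(1) + diff(rotationRange) * rand();
```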

| mibDeepController.AugOpt3D |

- .Fraction = .6; % augment 60% of patches
- .FillValue = 0;
- .RandXReflection = true;
- .RandYReflection = true;
- .RandZReflection = true;
- .Rotation90 = true;
- .ReflectedRotation90 = true;

| mibDeepController.availableArchitectures |

containers.Map with available architectures

- keySet = {2D Semantic, 2.5D Semantic, 3D Semantic, 2D Patch-wise, 2D Instance};
- valueSet{1} = {DeepLab v3+, SegNet, U-net, U-net +Encoder}; old: {U-net, SegNet, DLv3 Resnet18, DLv3 Resnet50, DLv3 Xception, DLv3 Inception-ResNet-v2}
- valueSet{2} = {Z2C + DLv3, Z2C + DLv3, Z2C + U-net, Z2C + U-net +Encoder}; % 3DC + DLv3 Resnet18'
- valueSet{3} = {U-net, U-net Anisotropic}
- valueSet{4} = {Resnet18, Resnet50, Resnet101, Xception}
- valueSet{5} = {SOLOv2} old: {SOLOv2 Resnet18, SOLOv2 Resnet50};

| mibDeepController.availableEncoders |

containers.Map with available encoders. Each key is a combination of "workflow -space- architecture", for example {2D Semantic DeepLab v3+, 2D Semantic U-net +Encoder}. Each value lists the encoders for that combination; the last element is the index of the selected encoder.

- encodersList{1} = {Resnet18, Resnet50, Xception, InceptionResnetv2, 1}; % for 2D Semantic DeepLab v3+
- encodersList{2} = {Classic, Resnet18, Resnet50, 2}; % for 2D Semantic U-net +Encoder
- encodersList{3} = {Resnet18, Resnet50, 1}; % for 2D Instance SOLOv2'
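The key and value layout described for availableEncoders can be sketched as follows. The string quoting is reconstructed for the example, and the variable names `workflow`, `architecture`, `entry`, and `selectedEncoder` are hypothetical; this is a sketch of the lookup, not code taken from MIB.

```matlab
% Keys combine workflow and architecture, separated by a single space
keySet = {'2D Semantic DeepLab v3+', '2D Semantic U-net +Encoder'};
% Each value lists encoder names; the last element is the selected index
encodersList = { ...
    {'Resnet18', 'Resnet50', 'Xception', 'InceptionResnetv2', 1}, ...
    {'Classic', 'Resnet18', 'Resnet50', 2}};
availableEncoders = containers.Map(keySet, encodersList, 'UniformValues', false);

% Look up the encoders for one workflow/architecture combination
workflow = '2D Semantic';
architecture = 'U-net +Encoder';
entry = availableEncoders([workflow ' ' architecture]);
encoderNames = entry(1:end-1);          % names only
selectedIndex = entry{end};             % trailing numeric index
selectedEncoder = encoderNames{selectedIndex};
```

Storing the selection as the trailing element keeps the list and its current choice in a single map value, at the cost of having to strip the last element before populating a dropdown.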

| mibDeepController.BatchOpt |

a structure compatible with batch operation. The name of each field should be displayed in a tooltip of the GUI; it is recommended that the Tags of widgets match the names of the fields in this structure.

- .Parameter - [editbox], char/string
- .Checkbox - [checkbox], logical value true or false
- .Dropdown{1} - [dropdown], cell string for the dropdown
- .Dropdown{2} - [optional], an array with possible options
- .Radio - [radiobuttons], cell string Radio1 or Radio2...
- .ParameterNumeric{1} - [numeric editbox], cell with a number
- .ParameterNumeric{2} - [optional], vector with limits [min, max]
- .ParameterNumeric{3} - [optional], string: on - to round the value, off - do not round the value

Referenced by duplicateConfigAndNetwork(), importNetwork(), and saveConfig().
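The field conventions described for BatchOpt can be illustrated with a small structure. All field names and values below are hypothetical, and the clamping and rounding step is only an assumption about how the optional limits and the rounding switch might be applied.

```matlab
% Hypothetical BatchOpt-style structure following the documented conventions
BatchOpt = struct();
BatchOpt.NetworkFilename = 'myNetwork.mat';             % editbox: char/string
BatchOpt.UseAugmentation = true;                        % checkbox: logical
BatchOpt.Workflow = {'2D Semantic'};                    % dropdown: selected value
BatchOpt.Workflow{2} = {'2D Semantic', '3D Semantic'};  % dropdown: possible options
BatchOpt.Mode = {'Radio1'};                             % radiobuttons: cell string
BatchOpt.PatchSize = {64, [8 1024], 'on'};              % numeric: value, limits, rounding

% Assumed handling of a new numeric value: clamp to the limits, then round
newValue = 70.6;
limits = BatchOpt.PatchSize{2};
newValue = min(max(newValue, limits(1)), limits(2));
if strcmp(BatchOpt.PatchSize{3}, 'on')
    newValue = round(newValue);
end
BatchOpt.PatchSize{1} = newValue;
```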

| mibDeepController.childControllers |

list of opened subcontrollers

| mibDeepController.childControllersIds |

a cell array with names of initialized child controllers

| EVENT mibDeepController.closeEvent |

event fired when the window is closed

| mibDeepController.colormap20 |

colormap for 20 colors

| mibDeepController.colormap255 |

colormap for 255 colors

| mibDeepController.colormap6 |

colormap for 6 colors

| mibDeepController.DynamicMaskOpt |

options for calculation of dynamic masks for prediction using the blocked image mode

- .Method = Keep above threshold; % Keep above threshold or Keep below threshold
- .ThresholdValue = 60;
- .InclusionThreshold = 0.1; % Inclusion threshold for mask blocks

Referenced by importNetwork(), and saveConfig().
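One plausible reading of the DynamicMaskOpt fields is sketched below: a block is thresholded, and it is kept for prediction when the masked fraction reaches the inclusion threshold. The exact rule, including the use of `>=`, is an assumption for illustration; the toy `block` and the names `mask`, `maskedFraction`, and `processBlock` are hypothetical.

```matlab
% Options as listed for DynamicMaskOpt
DynamicMaskOpt = struct();
DynamicMaskOpt.Method = 'Keep above threshold';
DynamicMaskOpt.ThresholdValue = 60;
DynamicMaskOpt.InclusionThreshold = 0.1;

% Toy 3x3 image block with a single bright pixel
block = uint8([10 20 200; 30 40 50; 15 25 35]);

% Threshold the block according to the selected method
switch DynamicMaskOpt.Method
    case 'Keep above threshold'
        mask = block > DynamicMaskOpt.ThresholdValue;
    case 'Keep below threshold'
        mask = block < DynamicMaskOpt.ThresholdValue;
end

% Assumption: the block is processed when enough of it is inside the mask
maskedFraction = nnz(mask) / numel(mask);
processBlock = maskedFraction >= DynamicMaskOpt.InclusionThreshold;
```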

| mibDeepController.gpuInfoFig |

a handle for GPU info window

| mibDeepController.InputLayerOpt |

a structure with settings for the input layer

- .Normalization = zerocenter;
- .Mean = [];
- .StandardDeviation = [];
- .Min = [];
- .Max = [];

Referenced by importNetwork(), and saveConfig().

| mibDeepController.listener |

a cell array with handles to listeners

| mibDeepController.mibController |

handle to mib controller

| mibDeepController.mibModel |

handle to mibModel

Referenced by mibDeepController().

| mibDeepController.modelMaterialColors |

colors of materials

Referenced by startPrediction2D(), startPrediction3D(), and startPredictionBlockedImage().

| mibDeepController.PatchPreviewOpt |

structure with preview patch options

- .noImages = 9, number of images in montage
- .imageSize = 160, patch size for preview
- .labelShow = true, display overlay labels with details
- .labelSize = 9, font size for the label
- .labelColor = black, color of the label
- .labelBgColor = yellow, color of the label background
- .labelBgOpacity = 0.6; % opacity of the background

| mibDeepController.SegmentationLayerOpt |

options for the segmentation layer

Referenced by importNetwork(), and saveConfig().

| mibDeepController.SendReports |

send email reports with progress of the training process

- .T_SendReports = false;
- .FROM_email = 'user@gmail.com';
- .SMTP_server = smtp-relay.brevo.com;
- .SMTP_port = 587;
- .SMTP_auth = true;
- .SMTP_starttls = true;
- .SMTP_sername = 'user@gmail.com';
- .SMTP_password = ';
- .sendWhenFinished = false;
- .sendDuringRun = false;

| mibDeepController.sessionSettings |

structure for the session settings .countLabelsDir - directory with labels to count, used in count labels function

| mibDeepController.TrainEngine |

temp property to test trainnet function for training: can be trainnet or trainNetwork

| mibDeepController.TrainingOpt |

a structure with training options; the defaults are obtained from obj.mibModel.preferences.Deep.TrainingOpt, see getDefaultParameters.m

- .solverName = adam;
- .MaxEpochs = 50;
- .Shuffle = once;
- .InitialLearnRate = 0.0005;
- .LearnRateSchedule = piecewise;
- .LearnRateDropPeriod = 10;
- .LearnRateDropFactor = 0.1;
- .L2Regularization = 0.0001;
- .Momentum = 0.9;
- .ValidationFrequency = 400;
- .Plots = training-progress;
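To see what the default learning-rate settings imply, the sketch below computes the rate per epoch. It assumes the usual piecewise behavior of MATLAB training options, where the rate is multiplied by the drop factor once every drop period; the variable names are hypothetical and no toolbox function is called.

```matlab
% Default values as listed for TrainingOpt
TrainingOpt = struct();
TrainingOpt.MaxEpochs = 50;
TrainingOpt.InitialLearnRate = 0.0005;
TrainingOpt.LearnRateSchedule = 'piecewise';
TrainingOpt.LearnRateDropPeriod = 10;
TrainingOpt.LearnRateDropFactor = 0.1;

% Assumed piecewise schedule: one drop after every LearnRateDropPeriod epochs
epochs = 1:TrainingOpt.MaxEpochs;
numDrops = floor((epochs - 1) / TrainingOpt.LearnRateDropPeriod);
learnRate = TrainingOpt.InitialLearnRate * TrainingOpt.LearnRateDropFactor .^ numDrops;
```

Under these assumptions the rate stays at 0.0005 for epochs 1 to 10 and is ten times smaller for each following block of 10 epochs, so a long run with the defaults spends its final epochs at a very small step size.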

| mibDeepController.TrainingProgress |

a structure to be used for the training progress plot in the compiled version of MIB

- .maxNoIter - max number of iterations during training
- .iterPerEpoch - iterations per epoch
- .stopTraining - logical switch that forces training to stop and saves all progress

| mibDeepController.View |

handle to the view / mibDeepGUI

| mibDeepController.wb |

handle to waitbar

Referenced by duplicateConfigAndNetwork(), exportNetwork(), importNetwork(), previewDynamicMask(), and startPreprocessing().